(Part 2) The Work Before Agents: Turning Data Into Context (So AI Can Actually Help)

Jan 15, 2026

If Part 1 was about reframing “AI” into jobs-to-be-done, then the natural follow-up question I keep getting is:

“Okay, so how do we actually get there?”

This is where I think a lot of teams get tripped up. Not because they don’t have data. (Again, teams are not data starved.) And not because they aren’t excited about AI. But because they’re trying to jump from raw information to autonomous action without building the layer in the middle that makes AI useful in the real world.

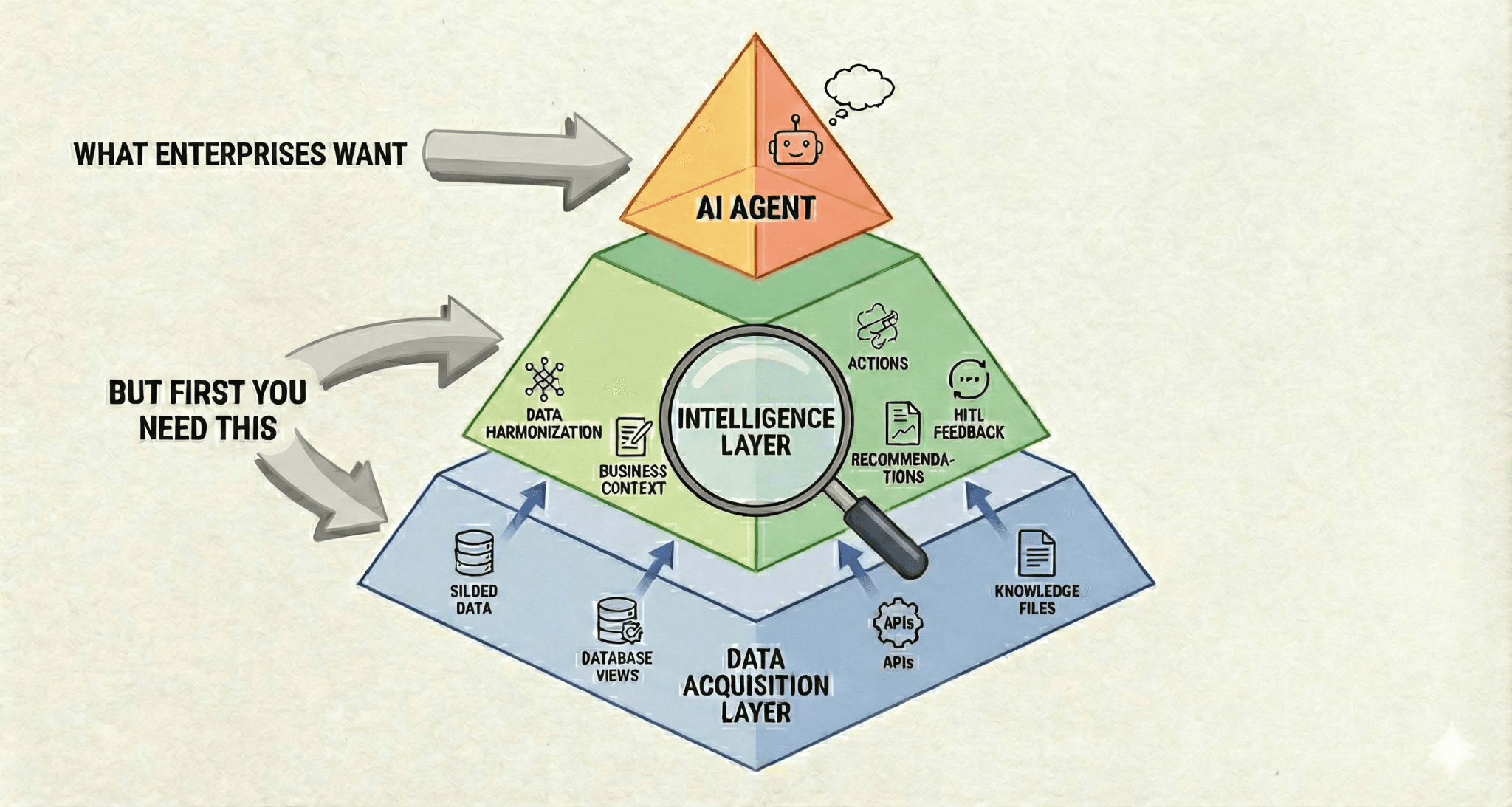

A simple way to think about this is the pyramid my friend Felipe, a forward deployed engineer with Google Cloud, shared: data acquisition at the base, an intelligence / context layer in the middle, and AI agents at the top.

Most people want to start at the top. The reality is: you can’t. But value isn’t only available at the top. It’s also available, and ripe, in the intelligence layer in the middle. The value just compounds from there.

Data acquisition is not the problem

Most solar teams already have the ingredients:

Monitoring / generation data

Weather data

Alerts / alarms

Work orders and maintenance history

Predicted model / 8760s

Financial models and budgets

Contractual terms (guarantees, SLAs, penalties, revenue assumptions)

The problem is that these “ingredients” aren’t packaged in a way that supports decisions. They live across too many tools, with inconsistent naming, inconsistent definitions, and too much noise.

So a very normal day for an asset manager or O&M coordinator becomes:

“Is this alert real?”

“Is it weather or equipment?”

“Has this been happening for weeks? Is this new?”

“What else is going on at this site?”

“Do we have an open work order?”

“Is this financially material or just annoying?”

“If we act today, what do we do first?”

Add to the hairball…

Consider one site against the portfolio / fleet of sites–their statuses, histories, SLAs

Employee knowledge and experience… institutional knowledge

Alert fatigue and desensitization after years and years of alert chaos

That work–the interpretation work–is the middle layer. And it’s exactly what gets skipped when teams rush to “agents and AI.”

What the “intelligence/context layer” really means (in plain terms)

When I say “context,” I’m not talking about a fancy AI architecture diagram. I’m talking about the practical things that experienced solar teams already do–just manually, inconsistently, and often inside someone’s head.

Context is what turns a data point into a decision. It stitches together data points to create insights and tell the story… that “aha!” moment.

In solar operations, context usually includes:

History: Is this new, recurring, seasonal, or expected?

Comparisons: Is this site behaving differently than similar sites?

Causality cues: Does it correlate with weather, curtailment, or equipment behavior?

Operational state: Are technicians already scheduled? Is there an open ticket?

Business impact: Does this affect revenue, guarantees, customer trust, or financing covenants?

Priority rules: What should matter most this week given constraints?

If an AI system can’t “see” that context, it can still produce something–but it won’t feel right. It will feel like a smart intern: helpful sometimes, confidently wrong other times, and always needing supervision.
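To make that concrete, here is a minimal Python sketch of what “seeing” context could look like for a single alert. Everything in it is an assumption for illustration: the `AlertContext` fields, the `triage` function, and the thresholds (a 2% peer gap, $100 per day, 7 days) are hypothetical and not taken from any real monitoring product. Your own definitions would replace them.

```python
from dataclasses import dataclass


@dataclass
class AlertContext:
    """Hypothetical context attached to one alert; all field names are illustrative."""
    site: str
    days_active: int           # history: how long the condition has persisted
    peer_gap_pct: float        # comparison: shortfall vs. similar sites, in percent
    weather_explains: bool     # causality cue: does weather account for the drop?
    open_work_order: bool      # operational state: is someone already on it?
    est_daily_loss_usd: float  # business impact: estimated revenue lost per day


def triage(ctx: AlertContext,
           min_peer_gap_pct: float = 2.0,      # assumed threshold
           material_loss_usd: float = 100.0,   # assumed threshold
           recurring_days: int = 7) -> str:    # assumed threshold
    """Turn one alert plus its context into a plain-language next step."""
    if ctx.weather_explains:
        return "monitor: weather explains the drop"
    if ctx.peer_gap_pct < min_peer_gap_pct:
        return "monitor: in line with similar sites"
    if ctx.open_work_order:
        return "tracked: work order already open"
    if ctx.est_daily_loss_usd < material_loss_usd:
        return "low priority: not financially material"
    if ctx.days_active > recurring_days:
        return "escalate: recurring and material"
    return "investigate: new and material"


alert = AlertContext(site="Site A", days_active=12, peer_gap_pct=8.5,
                     weather_explains=False, open_work_order=False,
                     est_daily_loss_usd=450.0)
print(triage(alert))
```

The rules are deliberately simple. The point is that each question an experienced operator asks becomes an explicit field, so a person or an AI system is working from the same picture.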

It’s akin to you trying to bake a stellar French silk mocha pie without all of the needed ingredients. Valiant effort. Some things may be right, but as a whole, it’s not what I ordered.

The overlooked value: this layer creates ROI before any agent

Here’s something that’s easy to miss when you’re staring at the shiny “agent” promise:

You can create real, material value before you automate anything.

When you build this middle layer well, teams typically see benefits like:

fewer alerts that require human attention

faster root-cause clarity (or at least better hypotheses)

fewer internal debates about what the data “means”

more consistent reporting to leadership and customers

better prioritization of time and truck rolls

less time stitching together information across systems

A context layer can address some of the material people, process, and systems challenges you may already be facing, simply because it brings data together and creates visibility. On its own, it can break down siloed perspectives, cut manual work, and solve some challenges right away.

That’s not “AI someday” value. That’s immediate operational value. And in many organizations, it’s the difference between AI being a gimmick and AI being a serious capability. It’s also a step along the analytics and operational effectiveness maturity curve: it brings value in the short and medium term while you plan for the long term.

What “doing the work before agents” looks like

This is the part where people assume it’s a multi-year data lake project. It doesn’t have to be.

The goal is not to boil the ocean. The goal is to make progress on the specific jobs-to-be-done you care about.

In practice, this looks like:

Pick one or two high-value JTBD.

Example: “Prioritize which sites need attention today.” Or, “Explain underperformance in plain language for leadership.”

Get brutally clear on definitions.

What counts as underperformance? What’s material vs noise? Who decides?

Connect the minimum set of systems required.

Not everything. Just enough to make the output credible.

Add business rules and institutional knowledge.

This is where you encode what your best operators already know.

Create visibility + explanation first.

Before “action.” Before “autonomy.” Earn trust.

When teams skip these steps, the agent becomes a layer of confusion. When they do them, the agent becomes an amplifier.
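As a rough illustration of those steps for the “prioritize which sites need attention today” job, here is a small Python sketch. It is only a sketch under stated assumptions: the `SiteDay` record, the 90% underperformance ratio, and the $50 per day materiality floor are hypothetical placeholders for whatever definitions your team agrees on, and the numbers are made up.

```python
from dataclasses import dataclass

# Assumed definitions (the "get brutally clear" step); replace with your own.
UNDERPERFORMANCE_RATIO = 0.90  # below 90% of expected energy counts as underperforming
MATERIAL_LOSS_USD = 50.0       # losses under $50/day are treated as noise


@dataclass
class SiteDay:
    """Hypothetical one-day record joined from the minimum set of systems."""
    site: str
    actual_kwh: float         # from monitoring
    expected_kwh: float       # from the predicted model / 8760
    price_usd_per_kwh: float  # from the financial model
    open_work_order: bool     # from the work order system


def daily_loss_usd(day: SiteDay) -> float:
    """Estimated revenue lost today; never negative."""
    return max(day.expected_kwh - day.actual_kwh, 0.0) * day.price_usd_per_kwh


def sites_needing_attention(days: list[SiteDay]) -> list[str]:
    """Return plain-language lines for material underperformers, largest loss first."""
    flagged = []
    for day in days:
        ratio = day.actual_kwh / day.expected_kwh if day.expected_kwh > 0 else 1.0
        loss = daily_loss_usd(day)
        if ratio < UNDERPERFORMANCE_RATIO and loss >= MATERIAL_LOSS_USD:
            note = "work order open" if day.open_work_order else "no work order yet"
            line = f"{day.site}: {ratio:.0%} of expected, ~${loss:.0f}/day lost, {note}"
            flagged.append((loss, line))
    flagged.sort(key=lambda item: item[0], reverse=True)
    return [line for _, line in flagged]


portfolio = [
    SiteDay("Alpha", 800.0, 1000.0, 0.10, False),     # underperforming, but not material
    SiteDay("Bravo", 4000.0, 5000.0, 0.10, True),
    SiteDay("Charlie", 9500.0, 10000.0, 0.10, False),  # within the agreed tolerance
    SiteDay("Delta", 6000.0, 10000.0, 0.10, False),
]
for line in sites_needing_attention(portfolio):
    print(line)
```

Notice that nothing here takes action. The output is visibility plus explanation: a short, ranked list a person can sanity-check, which is where trust starts.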

Don’t forget that you have agents now–real people

One point that rarely comes up when leaders talk about their hopes and dreams for AI is people. There are bigger macroeconomic questions about what happens to the workforce if agents become more prevalent.

However, at the organizational level, many leaders, and even knowledge workers themselves, fail to address or acknowledge this piece: the asset managers, O&M managers, asset coordinators, data analysts, and others on the team are agents today.

There’s a lot of focus on implementing AI technology but little in the way of upskilling current staff.

Part of the challenge existing staff face is the very thing a context layer can help with: disconnected, siloed data. Surfacing the contextual story lets asset managers focus their time on value-add work rather than data admin. O&M teams can cut to the chase and replace the inverter that is now known to be showing high failure rates not just at one site but across the portfolio.

An intelligence and contextual layer starts the upskilling journey of the workplace by driving data-backed decisions at a scale not previously available because of the challenges shared in Part 1.

It’s important to remember the PEOPLE leg of the three-legged stool, alongside process and systems.

Where this goes next

In Part 1, we talked about AI as a tool to accomplish jobs-to-be-done. In this post, we talked about the foundation that makes those jobs achievable with AI in the first place: turning data into context.

In Part 3, I’ll bring this down to a practical 2026 plan: how solar teams can move this forward this year without overcommitting, overbuilding, or waiting for the perfect future state.